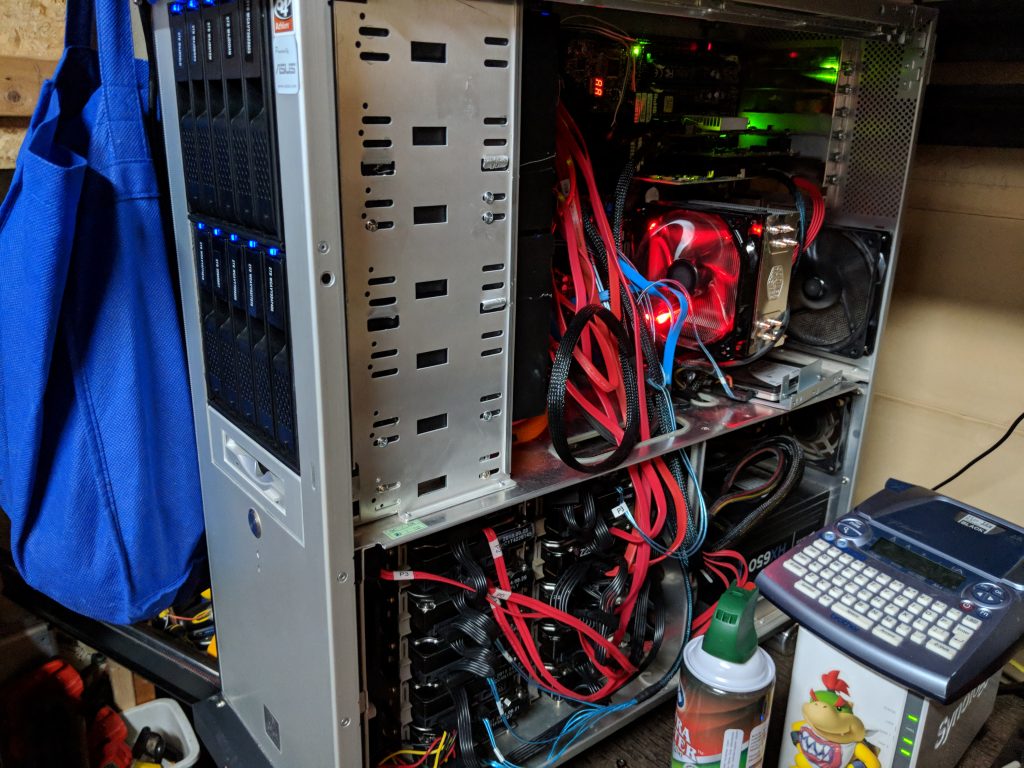

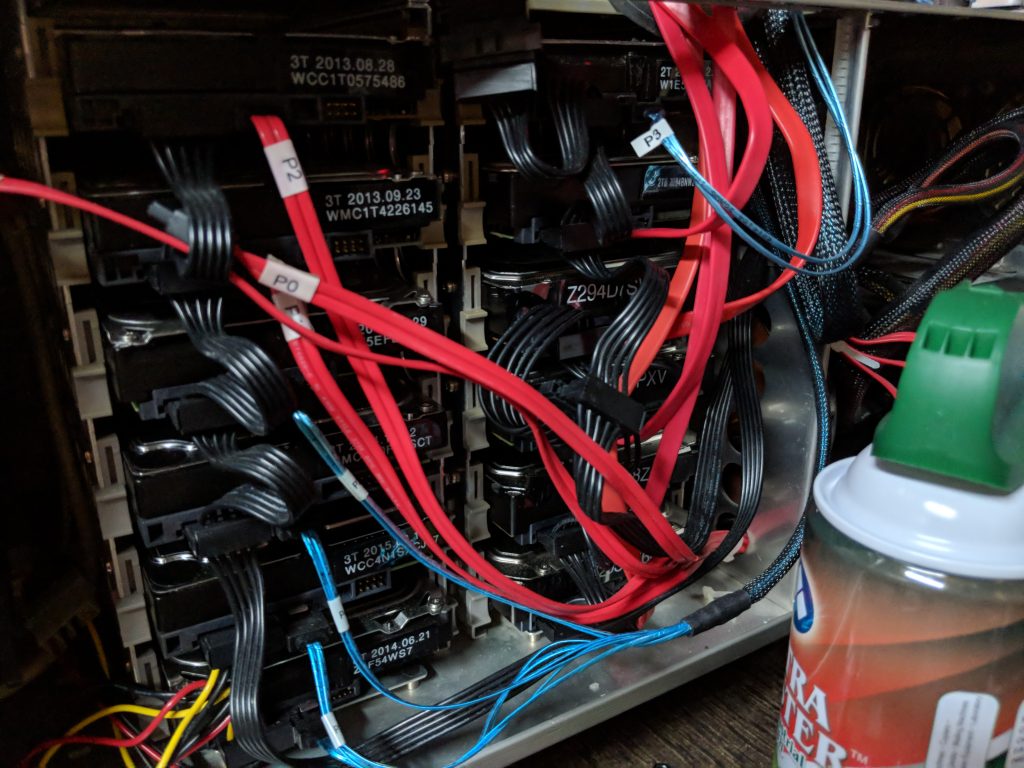

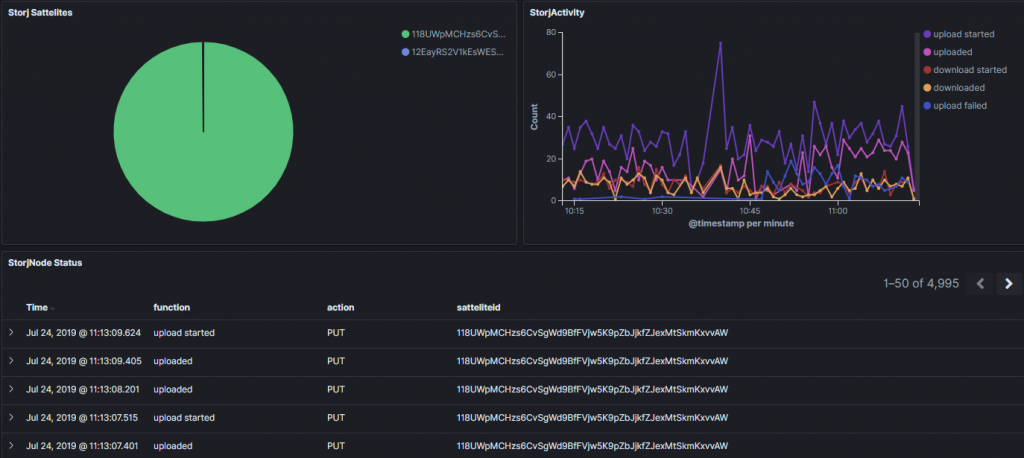

I’m starting my adventures as a Storj node operator (SNO) during the alpha, and I am enjoying gathering statistics and whatnot.

This post is devoted to that adventure. Right now, it is just a few scripts and other bits I am using:

Here is my Logstash filter for the Storj storagenode Docker logs:

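```
filter {
  # Tag every event that comes from the Docker container named "storagenode"
  if [container][name] == "storagenode" {
    mutate {
      add_tag => ["StorageNode"]
    }
  }

  if "StorageNode" in [tags] {
    # Replace Windows-style line endings with a placeholder
    mutate {
      gsub => ["message", "\r\n", "LINE_BREAK"]
    }

    # Parse the line with the custom STORJPARSE pattern,
    # which is defined in a file under /etc/logstash/patterns
    grok {
      patterns_dir => ["/etc/logstash/patterns"]
      match => { "message" => "%{STORJPARSE}" }
      # Replace the original message with the captured remainder
      # instead of turning "message" into an array of both values
      overwrite => ["message"]
    }
  }
}
```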
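To make the two-stage flow explicit (tag first, then clean up only tagged events), here is a rough Python model of what the filter above does to a single event. It is an illustration, not Logstash itself: the function name storagenode_filter is hypothetical, it assumes the event is a plain dict with the container name under event["container"]["name"], and the grok step is deliberately left out.

```python
def storagenode_filter(event: dict) -> dict:
    """Hypothetical Python model of the tag-then-clean-up filter (no grok step).

    Assumes the container name sits under event["container"]["name"].
    """
    # Stage 1: tag events from the container named "storagenode".
    if event.get("container", {}).get("name") == "storagenode":
        tags = event.setdefault("tags", [])
        if "StorageNode" not in tags:  # add_tag does not duplicate a tag
            tags.append("StorageNode")

    # Stage 2: only tagged events get the CRLF placeholder substitution
    # (the real pipeline would then run grok on the result).
    if "StorageNode" in event.get("tags", []):
        event["message"] = event["message"].replace("\r\n", "LINE_BREAK")
    return event
```

Events from any other container pass through untouched, which is why the tag check comes first: everything after it only ever sees storagenode lines.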

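And here are the grok patterns I am currently using to parse those lines. They go in a pattern file inside the patterns_dir from the config above (/etc/logstash/patterns):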

```
FUNCTION (download(ed| failed| started)?|upload(ed| failed| started)?)
DELETE (deleted)
STORAGEPARSE %{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{FUNCTION:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}", "SatelliteID": "%{DATA:satelliteid}", "Action": "%{DATA:action}"\}%{GREEDYDATA:message}
CCANCEL %{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{GREEDYDATA}: infodb:%{SPACE}%{DATA:action},%{DATA:function}%{GREEDYDATA:message}
CCANCEL1 %{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{FUNCTION:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}", "SatelliteID": "%{DATA:satelliteid}", "Action": "%{DATA:action}", "error": "%{DATA:action_error}"%{GREEDYDATA:message}
RPCCANCEL %{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore protocol: rpc error: code = Canceled desc = %{SPACE}%{DATA:action},%{DATA:function}%{GREEDYDATA:message}
STORJDELETE %{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{DELETE:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}"
# Order matters: CCANCEL1 must be tried before STORAGEPARSE, which would otherwise
# also match failed transfers and swallow the error text into "action"
STORJPARSE (?:%{STORJDELETE}|%{RPCCANCEL}|%{CCANCEL1}|%{CCANCEL}|%{STORAGEPARSE})
```
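Grok patterns are easy to get subtly wrong, so it can help to check them offline before reloading Logstash. Below is a minimal Python sketch that expands the patterns above into Python regular expressions and tries them in the same order as STORJPARSE. Assumptions: the stock TIMESTAMP_ISO8601, SPACE, DATA and GREEDYDATA patterns are replaced by simplified stand-ins, Python's re module is used instead of the regex engine Logstash uses, and expand and storj_parse are hypothetical helper names.

```python
import re

PATTERNS = {
    # Simplified stand-ins for the stock grok base patterns (assumption:
    # close enough for these log lines, not identical to the real ones).
    "TIMESTAMP_ISO8601": r"\d{4}-\d{2}-\d{2}[T ]\d{2}:?\d{2}(?::?\d{2}(?:\.\d+)?)?(?:Z|[+-]\d{2}:?\d{2})?",
    "SPACE": r"\s*",
    "DATA": r".*?",
    "GREEDYDATA": r".*",
    # Custom patterns, copied from the patterns file above.
    "FUNCTION": r"(download(ed| failed| started)?|upload(ed| failed| started)?)",
    "DELETE": r"(deleted)",
    "STORAGEPARSE": r'%{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{FUNCTION:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}", "SatelliteID": "%{DATA:satelliteid}", "Action": "%{DATA:action}"\}%{GREEDYDATA:message}',
    "CCANCEL": r"%{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{GREEDYDATA}: infodb:%{SPACE}%{DATA:action},%{DATA:function}%{GREEDYDATA:message}",
    "CCANCEL1": r'%{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{FUNCTION:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}", "SatelliteID": "%{DATA:satelliteid}", "Action": "%{DATA:action}", "error": "%{DATA:action_error}"%{GREEDYDATA:message}',
    "RPCCANCEL": r"%{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore protocol: rpc error: code = Canceled desc = %{SPACE}%{DATA:action},%{DATA:function}%{GREEDYDATA:message}",
    "STORJDELETE": r'%{TIMESTAMP_ISO8601:time}%{SPACE}%{GREEDYDATA}%{SPACE}piecestore%{SPACE}%{DELETE:function}%{SPACE}\{"Piece ID"\: "%{DATA:pieceid}"',
}

# Same order as the STORJPARSE alternation.
STORJPARSE_ORDER = ["STORJDELETE", "RPCCANCEL", "CCANCEL1", "CCANCEL", "STORAGEPARSE"]

# Matches %{NAME} and %{NAME:field} references.
TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(pattern):
    """Recursively replace grok references with plain regex groups."""
    def repl(m):
        name, field = m.group(1), m.group(2)
        body = expand(PATTERNS[name])
        # %{NAME:field} becomes a named group, bare %{NAME} a non-capturing one.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return TOKEN.sub(repl, pattern)


COMPILED = [(name, re.compile(expand(PATTERNS[name]))) for name in STORJPARSE_ORDER]


def storj_parse(line):
    """Return (pattern name, captured fields) for the first match, else None."""
    for name, regex in COMPILED:
        m = regex.search(line)
        if m:
            return name, m.groupdict()
    return None
```

Trying the alternatives one at a time, rather than compiling STORJPARSE as a single regex, sidesteps the fact that Python's re does not allow the same group name (time, function, and so on) twice in one pattern. Feeding it a made-up failed-upload line such as `... piecestore upload failed {"Piece ID": "DEF456", "SatelliteID": "SAT1", "Action": "PUT", "error": "context canceled"}` also shows why CCANCEL1 sits before STORAGEPARSE: STORAGEPARSE alone still matches that line, but its lazy action capture runs on to the closing brace and swallows the error text.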